Sorry the video's not the best; all I had was CamStudio. Below are all the instructions needed to run the demo on my lab computer.

The actual file is hosted on the website (815 MB): http://

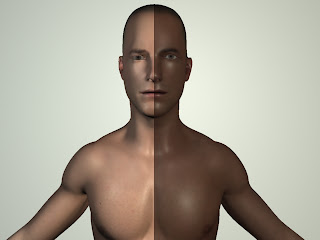

To demo the shader as seen in the video:

In the Python command line, type "import shader"
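A note on typing "import shader": Python only finds the module if the folder containing it is on sys.path, and typing the same import a second time in one Maya session does nothing, because Python caches imported modules. The sketch below adds the folder if needed and reloads the module on repeat runs. The helper name load_demo_module and the folder path in the usage line are hypothetical, not part of the project. It assumes Python 3 (Maya 2022 and later); under the Python 2 interpreter in older Maya versions, use the built-in reload() instead.

```python
import importlib
import sys


def load_demo_module(name, folder):
    """Import `name` from `folder`, or reload it if it was already imported.

    Hypothetical helper: a plain `import` is a no-op the second time in the
    same session because the module is cached in sys.modules, while
    importlib.reload() re-executes the module's top-level code.
    """
    # Make sure the folder holding the script can be searched.
    if folder not in sys.path:
        sys.path.insert(0, folder)
    # Needed if the .py file was created after the interpreter started.
    importlib.invalidate_caches()
    if name in sys.modules:
        return importlib.reload(sys.modules[name])
    return importlib.import_module(name)


# Usage inside Maya (placeholder path; point it at wherever the module lives):
# shader = load_demo_module("shader", r"C:\path\to\scripts")
```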

Add the appropriate files from C:\Kaitlin\SeniorProject\

Choose "Create Shader" -> a shader named "skinOver" is created

To apply it to an object: right-click the object -> Assign Existing Material -> skinOver

If you added a displacement map, it can be adjusted. There is no error checking at this point, so if no displacement file was selected, please don't click the displacement control.

To apply the exhaustion progression -> Exhaust

The Color, Epidermal, Subdermal, and Backscatter images will be shown if they were selected.

Click 3 times to choose the eyes and mouth -> hit Enter -> there will be a rather long wait while the files are generated

Repeat for the remaining files that pop up

The files are then ready. For demo purposes only every 10th frame was created, so please view frames 0, 10, 20, etc., up to 490.
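Since only every 10th frame was generated, the viewable frames are 0, 10, ..., 490, which is 490 / 10 + 1 = 50 frames. The small sketch below lists them and snaps an arbitrary frame number down to the nearest generated one, which is handy when scrubbing the timeline. The function names are hypothetical and only for illustration; the step of 10 and last frame of 490 come from the step above.

```python
STEP = 10         # only every 10th frame was generated for the demo
LAST_FRAME = 490  # last generated frame


def demo_frames(step=STEP, last=LAST_FRAME):
    """All frame numbers that exist in the demo output: 0, 10, ..., 490."""
    return list(range(0, last + 1, step))


def snap_to_demo_frame(frame, step=STEP, last=LAST_FRAME):
    """Clamp a frame to [0, last] and round it down to a generated frame."""
    frame = max(0, min(int(frame), last))
    return frame - frame % step


frames = demo_frames()
print(len(frames), frames[:3], frames[-1])  # 50 [0, 10, 20] 490
print(snap_to_demo_frame(137))              # 130
```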

To demo sweating:

Open Maya

Select the faces that should define the surface emitter
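If you would rather pick the emitter faces by script than by hand, Maya addresses polygon faces as components such as pSphere1.f[3], or ranges such as pSphere1.f[0:9]. The sketch below builds those names from a list of face indices, merging consecutive indices into ranges, and when run inside Maya selects them with maya.cmds.select. The mesh name, the indices, and both helper names are hypothetical placeholders; it assumes, as the steps here imply, that the sweat script reads the current selection.

```python
def _face_name(mesh, first, last):
    """Format one face component: 'mesh.f[i]' or 'mesh.f[i:j]'."""
    if first == last:
        return "%s.f[%d]" % (mesh, first)
    return "%s.f[%d:%d]" % (mesh, first, last)


def face_components(mesh, indices):
    """Turn face indices into Maya component names, merging runs into ranges."""
    comps = []
    start = prev = None
    for i in sorted(set(int(i) for i in indices)):
        if start is None:
            start = prev = i
        elif i == prev + 1:
            prev = i  # still inside a consecutive run
        else:
            comps.append(_face_name(mesh, start, prev))
            start = prev = i
    if start is not None:
        comps.append(_face_name(mesh, start, prev))
    return comps


def select_emitter_faces(mesh, indices):
    """Select the faces in Maya if available; otherwise just return their names."""
    comps = face_components(mesh, indices)
    try:
        import maya.cmds as cmds  # only importable inside Maya
    except ImportError:
        return comps
    cmds.select(comps, replace=True)
    return comps


# Inside Maya, before running "import sweat" (placeholder mesh and faces):
# select_emitter_faces("pSphere1", range(0, 40))
```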

In the Python command line, type "import sweat"

Wait for the script to finish

Hit Play

Code:

C:\test

C:\Users\Kaitlin\Documents\

Files:

C:\Kaitlin\SeniorProject